AI Voice Cloning Is Here — 7 Ways to Protect Your Family from Scams in 2025

AI voice cloning scams are more convincing than ever. Here’s how to spot deepfake audio and protect your family from fraud in 2025.


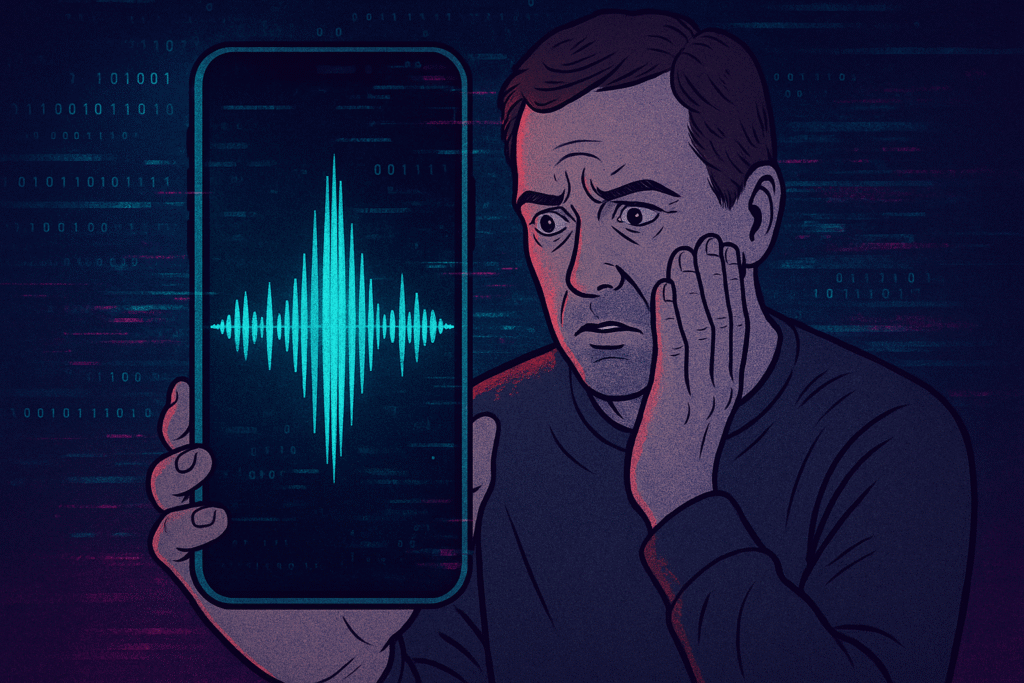

Imagine it’s the middle of the night. Your phone rings. Groggy, you answer and hear the desperate voice of your teenager calling for help. Your heart stops. But when you hang up and dial back, you find your child safe at home. That shouldn’t be possible, and yet it’s happening. Scammers using AI voice cloning are now impersonating loved ones in family-emergency scams, and they sound scarily real. A growing number of people report deepfake audio calls that frighten them into panic or even into handing over money. In one case, an Arizona mom got a ransom call demanding $1 million for her daughter, a scheme made possible by voice cloning. Today, AI voice cloning is not sci-fi; it’s a tool in criminals’ hands. The big question is how to build voice cloning scam protection for our kids, our parents, and ourselves.

What Is AI Voice Cloning, Anyway?

AI voice cloning is like the audio version of deepfake video. Instead of faking a face, these tools copy a person’s voice. By feeding an AI just a short clip of someone talking – say, a few seconds from a TikTok video or voicemail – the software learns their tone, accent, and inflections. Then it can speak any words in their voice. In fact, experts say only a brief sample is needed to create an almost perfect voice replica. The process isn’t magic: it’s machine learning. The AI analyzes patterns in the original audio and reproduces them.

These voice cloning AI tools have legitimate uses: authors use ElevenLabs to produce AI-narrated audiobooks, for example, and content creators dub their videos into multiple languages. But the same tech can be misused. Criminals can grab clips of your family’s voices from social media or online videos (Instagram Reels, YouTube vlogs, etc.) and feed them into a cloning program. As one FTC alert explains, a scammer only needs “a short audio clip of your family member’s voice” from online to clone it. Even professional voice artists are warning that their voices can be “stolen” and repurposed by such AI.

In short, AI voice cloning means software can now talk like anyone after listening to just a few seconds of them. That’s why experts say we should treat phone calls with more caution: a familiar voice on the line is no longer proof of who is calling.

Why AI Voice Cloning Is So Dangerous for Families

This is especially scary for families, because scammers often pretend to be family: a child in trouble, a grandparent locked up, or a partner in an accident. The setup is classic. An urgent, panicked voice that sounds just like your loved one begs for money or help. Then the emotional pressure starts: threats, tears, orders not to call the police. In one widely reported incident, a Brooklyn couple got a late-night call from what sounded exactly like the husband’s mother, crying that she had been kidnapped. They wound up sending hundreds of dollars via Venmo before realizing it was a scam. In another case, the DeStefano family in Arizona received a voice-cloning ransom demand for $1 million.

Why do these scams work so well? It’s partly psychology. When we hear a familiar voice – especially someone we love – our defenses drop. A security expert explains it bluntly: scammers “say things that trigger a fear-based emotional response, because when humans get afraid, we get stupid and don’t exercise the best judgment”. In other words, a crying child’s voice on the other end of the phone can overwhelm our logic.

The numbers back this up. The FBI reported that older Americans lost about $3.4 billion to various frauds in 2023, and it has warned that criminals are using AI to make those scams far more believable. Likewise, the FTC says Americans lost $2.7 billion to “imposter scams” in 2023, a category that includes fake family-emergency calls. In these family-emergency cons, criminals demand payment through hard-to-trace methods (gift cards, wire transfers, cryptocurrency), which is a huge red flag. But by the time victims realize the caller is fake, it’s often too late: the money is gone and trust is shaken.

For families, the risk is twofold. First, scammers target us specifically: grandparents, parents, and kids make favorite targets because we worry so much about each other. Second, the method is underhanded and fast; we can be tricked in a split second. One public safety advisory bluntly warns: “A panicked call that sounds like your child can trigger instant action.” Indeed it can. That’s why recognizing and defending against voice cloning AI attacks is a top priority for anyone with loved ones.

7 Ways to Protect Your Family Right Now

Fortunately, there are simple steps anyone can take – no advanced tech skills needed. Think of these as commonsense rules for the AI era. Each one can help stop a scammer in their tracks:

- Create a Family Safe Word or Phrase. Agree on a funny or unique “password phrase” that you’ll use in emergencies. For example, Grandma and Grandpa could agree their grandkids will always mention “yellow duck bananas” in a real crisis. Choose something hard to guess (no birthdays or pet names!), keep it secret, and never post it online. Experts say this can be a highly effective defense: if a caller can’t give your safe word, hang up immediately. (CBS reports security pros recommending this trick for just this reason.)

- Always Hang Up and Call Back. If someone calls claiming to be a family member in trouble, don’t give in to panic. Instead, hang up right away and dial their number from your contacts (or ask another trusted relative to call). Never trust the number on the caller ID, because scammers can spoof it. The FTC explicitly advises: “Don’t trust the voice – call the person who supposedly contacted you and verify the story”. Real emergencies can wait the few minutes it takes to make a safe call. Example: if “Billy” calls screaming for bail money, hang up and call Billy on a number you know. If he answers safe and sound, the first call was a scam. If you can’t reach him, call another relative who can check on him before you send a cent.

- Talk With Older (and Younger) Family Members. Some of our parents or kids may not even realize AI voice cloning is a thing. Sit down and explain, in plain language, what to watch for. Tell Grandma and Grandpa: “We have a secret word between us. If I ever ask for money over the phone without it, you’ll know it’s a scam.” Let your teenagers know the same. Encourage everyone in the family to be suspicious of any urgent financial request over a call. Just knowing that such scams exist can break their power. Share news stories or alerts from the FBI or FTC (for example, the FBI alert about criminals using cloned voices in kidnapping scams) so the threat feels real, not paranoid.

- Limit Voice and Video Posts Online. It may feel harmless to post voice notes, TikTok videos, or Instagram stories of your kids laughing or you singing in the shower. But thieves can grab those too. The FTC and others warn that even short clips of your voice on social media can be the raw material for cloning. Be mindful: the more you broadcast your or your family’s voice publicly, the easier it is to harvest. Check privacy settings – turn your profiles to “friends only” or “private” when possible. Think twice before posting audio or video of family members, especially children. (Yes, we love sharing baby’s first words, but maybe keep it between family.)

- Use a Call-Screening App or Feature. Modern phones have come a long way in detecting spam and scams. For example, Google Pixel phones now include AI “Scam Detection” that analyzes a call in real time and warns you if it suspects fraud (say, a demand for gift cards). Apple’s iPhones have “Silence Unknown Callers,” and carriers and third-party apps offer scam blocking too (for example, T-Mobile Scam Shield or Hiya). Turning on these features won’t catch every deepfake voice, but it can block or flag many imposter calls before you even pick up. Treat a “Scam Likely” label on your screen as a cue to be extra cautious.

- Report Suspicious Calls – It Helps Everyone. If you or a family member gets a really creepy call, don’t just brush it off. Report it to the authorities. Let your bank or credit union know what happened, and file a report with the FTC (visit ReportFraud.ftc.gov or call 1-877-FTC-HELP). You don’t have to have lost money to make a report – spotting a pattern is valuable in itself. When victims come forward, law enforcement can detect trends and take action on these scams. You’ll be helping protect others. (In fact, the FTC is actively collecting voice-cloning scam reports and even ran a “Voice Cloning Challenge” to find better defenses.)

- Keep Up with the Latest Scams. Scammers change tactics as fast as AI tech evolves. Stay informed through news outlets, government alerts, or your bank’s fraud advisories. For instance, the Identity Theft Resource Center reported a 148% jump in impersonation scams from April 2024 to March 2025, a sign that impersonation tricks such as voice scams are surging. Follow trusted sources (FTC, FBI, AARP, etc.) on social media or through newsletters so you hear right away if a new voice-scam trend appears. Talk about it at family dinners: a little prep can keep everyone safe.

By combining these steps – a secret family passphrase, a hang-up habit, open conversations, smart sharing online, tech tools, and vigilance – you build voice cloning scam protection around your household. None of these require you to become a tech wizard, just a little awareness and communication.

What About Legit Voice Cloning AI Tools?

You might wonder: isn’t voice cloning just a fad for cartoon villains? Actually, AI voice cloning services are booming in legitimate ways. Companies like ElevenLabs, Resemble AI, and others offer voice cloning AI tools for audiobooks, podcasts, ads, and more. For example, ElevenLabs recently rolled out a platform letting authors publish AI-generated audiobooks cheaply and easily. Major brands have used voice AI: Nike cloned NBA star Luka Dončić’s voice for an ad, and New York’s mayor even cloned his own voice in multiple languages to reach diverse communities. News outlets like The Atlantic and The Washington Post use AI narrators to create audio versions of articles. In medical or accessibility tech, voice cloning can preserve a person’s voice (for ALS patients, for example) or allow devices to speak in a more natural tone.

The key difference is consent and ethics. Legitimate services typically require permission (often a recording in your own voice) and have rules against impersonating others. They serve creativity and convenience: an author can narrate her book in her own cloned voice or, with permission, clone a collaborator’s voice to offer translations. Scams violate all of that by taking your voice without permission. In fact, the FTC has proposed extending its impersonation rule to cover companies that knowingly supply AI tools used to impersonate real people.

So yes, voice cloning itself can be used for good, but sadly the same power can be abused. Think of it like a kitchen knife: useful for cooking but dangerous in the wrong hands. Being aware of both sides lets you enjoy the benefits (cool AI apps, voice assistants) while staying alert for the tricks.

Final Thoughts: Stay Smart, Stay Safe

We’re not saying you should become paranoid every time the phone rings. But in the age of AI voice cloning, a dash of caution goes a long way. Treat any sudden, emotional plea on the phone with skepticism and step back. You and your family don’t need to guess or gamble – you just need a simple verification habit. Talk openly: remind everyone (kids and elders alike) that it’s okay to say no to odd calls, to hang up on requests for money, and to double-check identities. Reinforce that mom or dad will never call for Bitcoin out of the blue, and if in doubt, we call each other first.

Remember: you can outsmart these scams. By using a safe family phrase, verifying callers, limiting what you share online, and staying informed, you create a strong shield of voice cloning scam protection. In the end, the best defense is people watching out for people. Keep the conversation going, stay calm, and trust your gut. We’re all in this together – and together, we can keep our families safe from the frightening new wave of AI voice fraud.


FAQ

Can AI really clone a voice with just a few seconds of audio? Yes – surprisingly, it can. Modern voice-cloning tools only need a short clip to work. For example, news sources note that “AI can now clone a person’s voice using just a short audio sample”. In real scams, criminals have shown they can create a convincing fake voice from just a few seconds of you speaking. That’s why it’s important to assume any clear recording of your voice could be captured by AI.

Are there any free AI voice cloning services? Some voice AI tools (like ElevenLabs or Resemble AI) offer free tiers or demos aimed at creators, though voice cloning itself may require a paid plan. These let you try cloning your own voice or pick from a library of stock voices. However, those platforms typically require you to agree to rules (no impersonating others), and they say they monitor for abuse. We mention them only to show how widely available the technology is; any tool that can clone a voice can also be misused if someone uploads a loved one’s recording without permission. Always use such services responsibly and keep private voice clips off public sites.

How can I tell if a voice on the phone is a fake AI clone? It can be very hard to hear a difference. AI voices are getting more natural every day. That’s why it’s safest not to rely on sound alone. However, watch for other red flags: scam callers often use urgent, fear-based language (“Your daughter has been kidnapped!”) and demand unusual forms of payment like gift cards, wires, or cryptocurrency. They may refuse to answer simple personal questions that a real family member would know (like your safe word). You might also notice small glitches or unnatural pauses in an AI-generated voice. If any demand sounds odd – “Put $200 on a gift card, now!” – it’s almost certainly a scam. The golden rule: if it feels wrong, hang up and verify by calling the person back yourself.

Where can I get help or report a scam? If you suspect a voice cloning scam, tell your bank, local police, and report it to the FTC (at ReportFraud.ftc.gov or call 1-877-FTC-HELP). Reporting helps authorities track these crimes. You can also look for information from consumer protection sites like the FTC’s alerts or the FBI website. The more informed your family is, the better you can protect each other. Stay vigilant, and don’t hesitate to share what you learn – it could save someone else from falling victim.